Cognitive Operating Systems

Abstracting the essence of interactive experiences

Large Language Models (LLMs) are a powerful technology whose roots in neural network research go back at least to the mid-1980s. As models have grown in size and architectures have improved, emergent behavior has arisen, giving us a preview of the impact this technology will have on our world. In this article, I will discuss the emergent behavior of LLMs that resembles that of operating systems, along with my predictions about the secondary consequences that may follow (or at least be explored).

Emergent behavior arises when the activity of a system becomes frequent enough that human measurement can perceive a new entity and a new kind of action. It does not introduce anything new into reality but rather introduces something new to our perceptive faculties; it’s a discovery. Specific behaviors we’ve seen in the domain of LLMs include:

In-context Learning: Models can learn tasks during inference with no additional training.

Zero-shot/Few-shot Capabilities: Performing tasks without specific training or with minimal examples due to generalized patterns of action.

Multimodal Understanding: Applicability across multiple data types (e.g., text and images).

Complex Reasoning: Solving problems involving multi-step logic and explanations.

Self-correction: Revising outputs based on feedback or errors in prior responses.

Today, as many people take in the emergent behavior of LLMs, some have come to view them as pattern identifiers and pattern generators that can keep transforming their own output indefinitely. This has led some to draw comparisons with another kind of system that continuously transforms patterns into other patterns: operating systems.

Comparisons to Operating Systems

Let’s first discuss our existing concept of an operating system and why this comparison feels justified. What is an operating system in a general sense? It is a system that has access to linear memory. It can read pieces of that memory and perform actions that further read or write back to it. These memory locations are connected to hardware that is actively running and also writes to these places in linear memory. The contents of this memory might represent the pixels on your screen, the position of your mouse, or data to send to your Wi-Fi card. An operating system interacts with these memory locations indefinitely, so quickly that it creates an experience that seems interactive to our human senses.
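To make that loop concrete, here is a minimal sketch of what I mean. The memory layout, the cell names, and the simulated keyboard are all assumptions invented for illustration; no real hardware maps memory this way.

```python
# Hypothetical layout of a tiny linear memory; offsets are illustrative only.
KEY_CELL = 0       # the "keyboard hardware" writes a character code here
CURSOR_CELL = 1    # the system keeps its own state in memory too
SCREEN_BASE = 2    # the "display hardware" reads cells from here onward
SCREEN_SIZE = 16

def step(memory):
    """One transformation: read a few cells, write a few cells back."""
    key = memory[KEY_CELL]
    if key == 0:
        return  # nothing new from the hardware this tick
    cursor = memory[CURSOR_CELL]
    if cursor < SCREEN_SIZE:
        memory[SCREEN_BASE + cursor] = key
        memory[CURSOR_CELL] = cursor + 1
    memory[KEY_CELL] = 0  # mark the input as consumed

def screen_text(memory):
    """Decode the screen region the way a display would."""
    cells = memory[SCREEN_BASE:SCREEN_BASE + SCREEN_SIZE]
    return "".join(chr(c) for c in cells if c != 0)

memory = [0] * (SCREEN_BASE + SCREEN_SIZE)
for ch in "hi!":
    memory[KEY_CELL] = ord(ch)  # simulated hardware write
    step(memory)                # the system's reaction
print(screen_text(memory))
```

Run fast enough and often enough, a loop like this is what we perceive as typing on a screen.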

Today, we experience a similar effect in ChatGPT. The text we enter into ChatGPT is a kind of linear memory. ChatGPT reads this memory pattern and writes to it when it generates results. This memory’s encoded data is visible to us through our monitor as text characters on our screen. We indefinitely keep entering data, expanding upon the results of the text and generating new patterns. What arises from this is the feeling of something interactive.
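The same read-then-write loop can be sketched for a chat session. Note that `toy_model` here is a stand-in I made up so the example runs on its own; it is not how ChatGPT or any real model works, it simply echoes the last user line in upper case.

```python
def toy_model(memory):
    """Stand-in for an LLM: reads the whole memory and returns text to append.

    Assumption: a real model would predict a continuation; this one only
    echoes the most recent user line in upper case.
    """
    last_user_line = [line for line in memory if line.startswith("user: ")][-1]
    return "model: " + last_user_line[len("user: "):].upper()

def chat_turn(memory, user_text):
    """Append the user's text, then append whatever the model writes back."""
    memory.append("user: " + user_text)
    memory.append(toy_model(memory))
    return memory

memory = []  # the conversation is the linear memory
chat_turn(memory, "hello")
chat_turn(memory, "are you interactive?")
print("\n".join(memory))
```

The point is only the shape of the loop: the transcript grows, and every turn reads all of it before writing more.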

What differs between these two interactions? Firstly, the speed of transformations. Operating system transformations are mathematically similar to those of AI, but they are far simpler, typically reading or modifying a single memory location (adding, multiplying, or moving numbers). Thus, an operating system can perform transformations on small bits of memory very quickly. LLMs, on the other hand, use matrix multiplication to transform vast sequences of linear memory, applying operations to many elements simultaneously. This can be especially fast so long as the elements all require the same operation.
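A rough sketch of that contrast, under heavy simplification: the weights below are hand-picked for illustration rather than learned, and plain Python computes the sums one after another, whereas parallel hardware could compute every output cell at once.

```python
def scalar_step(memory, index):
    """CPU-style transformation: touch exactly one cell."""
    memory[index] = memory[index] + 1
    return memory

def matrix_step(weights, memory):
    """Matrix-style transformation: every output cell is a weighted sum of all inputs."""
    return [sum(w * m for w, m in zip(row, memory)) for row in weights]

memory = [1, 2, 3]
scalar_step(memory, 0)  # only cell 0 changes

# Hypothetical 3x3 weights; a trained model would have learned these.
weights = [
    [0, 1, 0],  # output 0 copies input 1
    [1, 0, 1],  # output 1 sums inputs 0 and 2
    [2, 0, 0],  # output 2 doubles input 0
]
transformed = matrix_step(weights, memory)
print(memory, transformed)
```

Every row of the matrix performs the same kind of operation (multiply, then add), which is what makes this style of transformation a good fit for hardware that does many identical operations in parallel.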

There is also a significant difference in the types of patterns that can be worked with. LLMs today work with very particular kinds of patterns. This data might be text, images, etc., encoded in a particular manner. LLMs are specialized to this data because they are created through a massive number of training steps on consistently structured data. Operating systems, on the other hand, tend to transform a wide variety of structures. Code could transform specific structures for hardware (like the protocol for communicating with USB drives) or very specific structures that only a human knows are meaningful.

Transformations Forever

Despite these differences, LLM matrix math and human-written code are fundamentally just transformations that can run indefinitely.

Consider a simple system with memory representing mouse velocity and other linear memory representing pixels on a screen. If we wanted to implement an experience where your mouse cursor displayed appropriately as you moved the mouse, we could easily write code that reads the mouse velocity from memory and translates it into pixels in the screen’s memory. We could also do this with LLMs. If we had a trained LLM take in mouse movement and the current screen’s pixels, it could generate a new sequence of encoded data in memory representing what the next screen’s pixels should look like.
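Here is a minimal sketch of the hand-written version of this cursor example. The screen size, the one-pixel cursor, and the function names are assumptions made for illustration. I gave `next_screen` the same inputs the learned version would take (current pixels plus mouse velocity) so the two approaches are interchangeable from the outside.

```python
WIDTH, HEIGHT = 8, 4  # hypothetical tiny screen

def next_screen(pixels, velocity):
    """Hand-written transformation: (current pixels, velocity) -> next pixels."""
    index = pixels.index(1)                # find the cursor in screen memory
    x, y = index % WIDTH, index // WIDTH
    x = min(max(x + velocity[0], 0), WIDTH - 1)   # clamp to the screen edges
    y = min(max(y + velocity[1], 0), HEIGHT - 1)
    new_pixels = [0] * (WIDTH * HEIGHT)    # linear screen memory, row-major
    new_pixels[y * WIDTH + x] = 1          # draw a one-pixel cursor
    return new_pixels

def render(pixels):
    """Show the linear screen memory as rows of text."""
    rows = [pixels[r * WIDTH:(r + 1) * WIDTH] for r in range(HEIGHT)]
    return "\n".join("".join("#" if p else "." for p in row) for row in rows)

pixels = [1] + [0] * (WIDTH * HEIGHT - 1)     # cursor starts in the top-left corner
for velocity in [(2, 1), (3, 0), (10, 5)]:    # the last move is clamped
    pixels = next_screen(pixels, velocity)
print(render(pixels))
```

A trained model would replace the body of `next_screen` with learned weights while keeping the same contract: pixels and velocity in, pixels out.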

These two implementations of transformations to generate human output are not the same mathematically, but they could, to the degree humans are able to measure visually, produce similar experiences of interactivity. The LLM could act as a compressed form of the understanding of how mouse and screen data transform into new screen data over time.

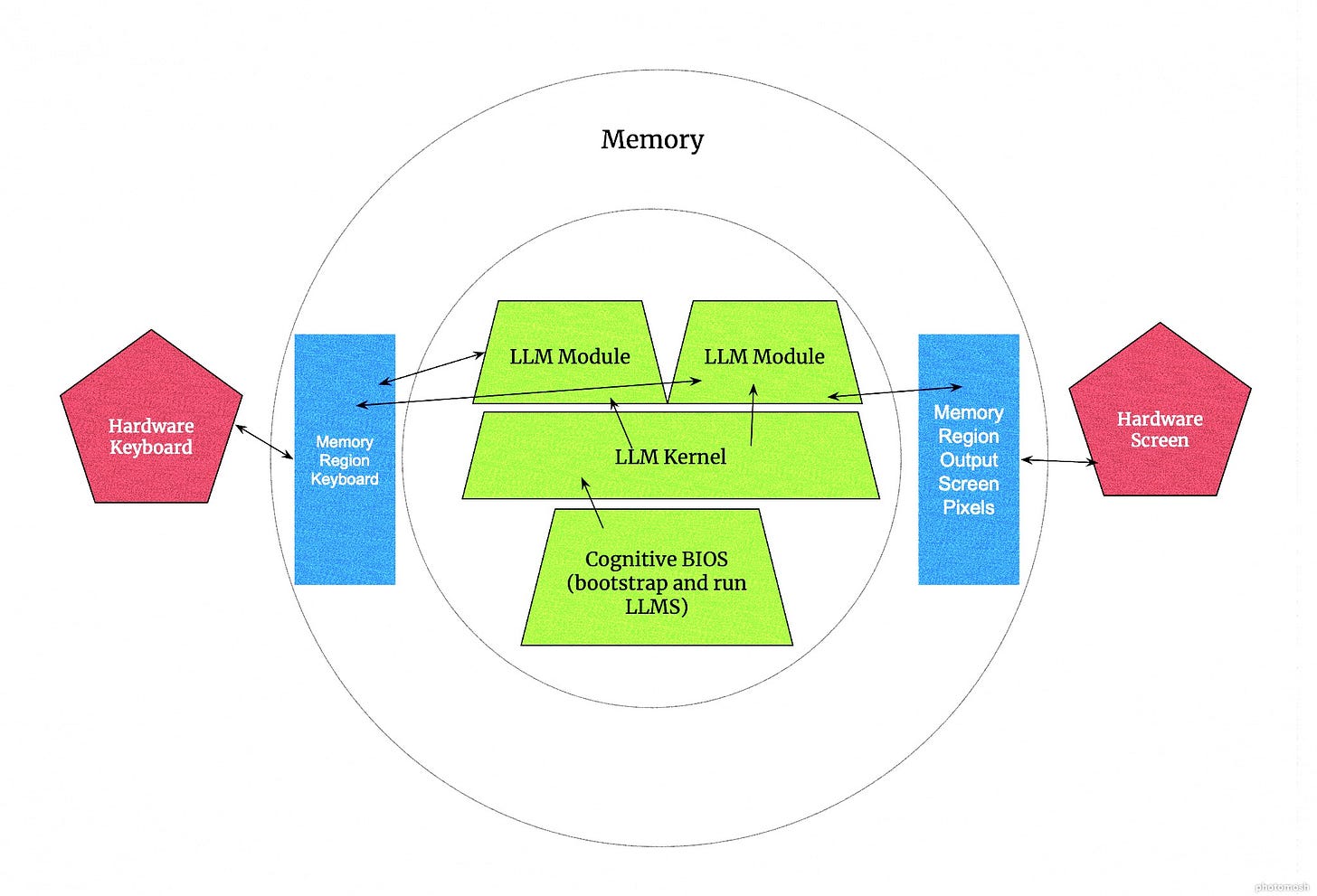

This simple program can be expanded into a system of multiple concurrent subsystems. An operating system kernel is a type of program responsible for multiple programs (modules) that transform linear memory. Similarly, we could imagine a set of many LLMs concurrently transforming pieces of memory under some guiding program.
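As a toy illustration of a guiding program, the sketch below gives each module one turn per round over shared memory. The module names and memory cells are hypothetical, and I use named cells instead of raw offsets purely for readability. Real kernels schedule far more cleverly than this round-robin.

```python
def clock_module(memory):
    """Writes its own cell each turn."""
    memory["ticks"] += 1

def blink_module(memory):
    """Reads what the clock wrote and writes a different cell."""
    memory["led"] = memory["ticks"] % 2

def kernel(memory, modules, rounds):
    """Round-robin: give every module one turn per round."""
    for _ in range(rounds):
        for module in modules:
            module(memory)
    return memory

memory = kernel({"ticks": 0, "led": 0}, [clock_module, blink_module], rounds=3)
print(memory)
```

Swapping either function for a trained model would not change the kernel at all, since it only cares that each module transforms memory when called.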

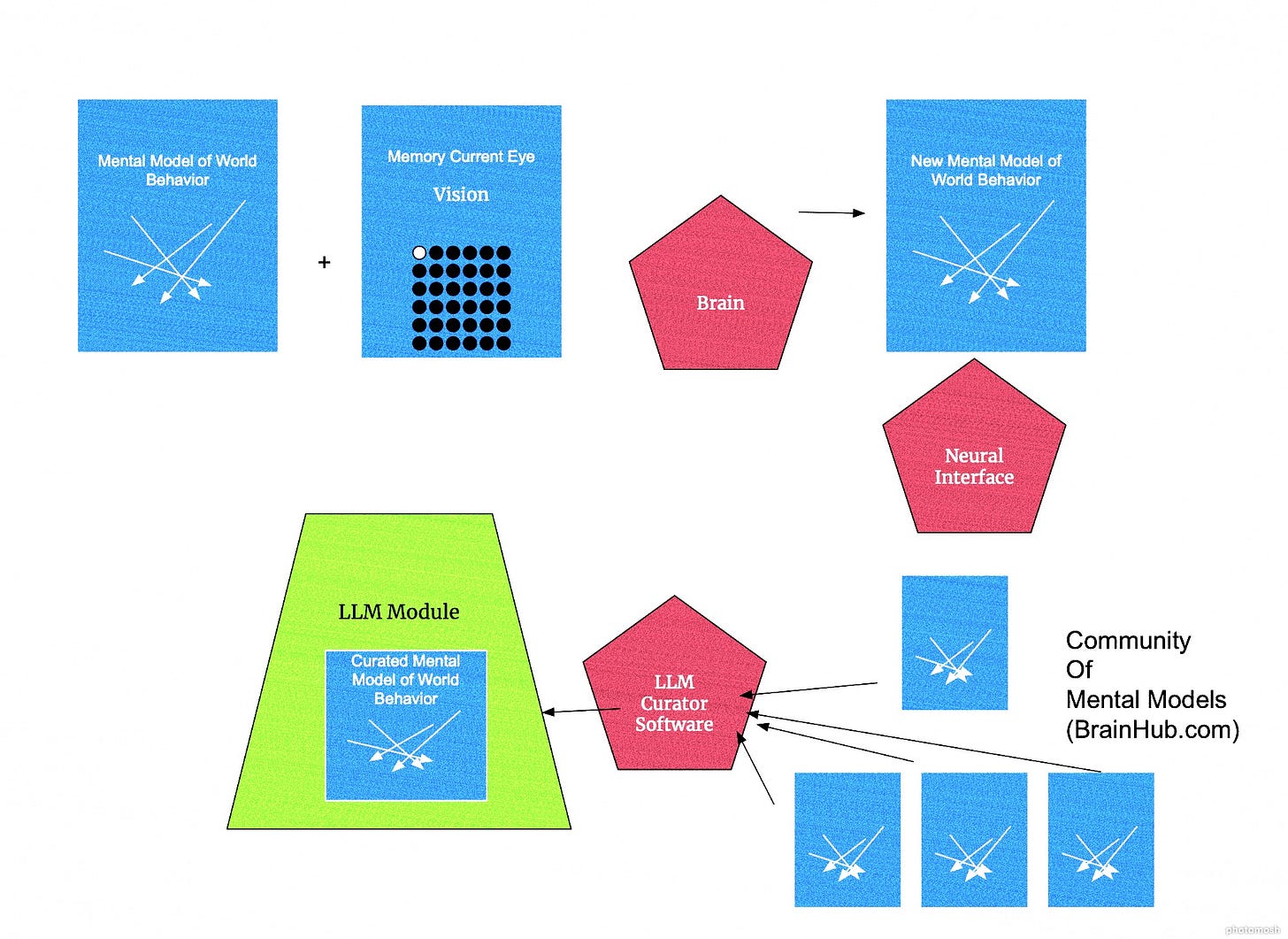

I term this concept of orchestrating LLM pattern transformers a Cognitive Operating System, as it functions similarly to the more general concept of an operating system but uses cognitive compression techniques akin to those of our own brain. Much like our brain sees interactive experiences and replays simulations of how new interactive situations would occur, LLMs can capture interactive experiences during training. Just as the human mind stores not every pixel from our retina but a compressed model of what we’ve seen, so too does the LLM.

BIOS for Brains

The maturation of Cognitive Operating Systems would probably require several innovations in the software industry. The first is an orchestrator that manages the memory regions that would be read and written to by LLMs. These LLMs would need some sort of mechanism for operating concurrently, likely a scheduler of sorts, that could iteratively transform all the various kinds of patterns that might be involved in a system and handle conflicts. The first implementations of these will likely be toy demos such as the cursor example above or video games, which require only a very limited set of linear memories useful for gamers.
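One way such conflict handling might look, as a sketch only: each module declares the memory regions it reads and writes, and the scheduler packs modules that do not collide into the same batch. The module descriptors and region names are invented for illustration, and this greedy packing ignores any required ordering between modules, which a real scheduler would have to respect.

```python
def make_module(name, reads, writes):
    """Hypothetical descriptor for one LLM module and the regions it touches."""
    return {"name": name, "reads": set(reads), "writes": set(writes)}

def conflicts(a, b):
    """Two modules conflict if one writes a region the other reads or writes."""
    return bool(
        a["writes"] & (b["reads"] | b["writes"])
        or b["writes"] & (a["reads"] | a["writes"])
    )

def schedule(modules):
    """Greedily pack modules into batches whose members can run concurrently."""
    batches = []
    for module in modules:
        for batch in batches:
            if not any(conflicts(module, other) for other in batch):
                batch.append(module)
                break
        else:
            batches.append([module])  # no compatible batch found; start a new one
    return [[m["name"] for m in batch] for batch in batches]

modules = [
    make_module("cursor", reads={"mouse", "screen"}, writes={"screen"}),
    make_module("audio", reads={"mouse"}, writes={"speaker"}),
    make_module("hud", reads={"score"}, writes={"screen"}),
]
batches = schedule(modules)
print(batches)
```

Here the cursor and audio modules can run side by side because they only share a region that both read, while the HUD has to wait because it writes to the same screen memory as the cursor.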

As time goes on, though, this set of memories might grow to include all the kinds of hardware that traditional operating systems typically interact with: storage mechanisms, the internet, etc. You’d need more complex systems to manage the execution of all these LLMs together. It’s likely that an LLM will be created specifically for the task of orchestrating other LLMs to produce a particular experience for a human. LLMs could communicate their dependencies to one another so that what’s required gets loaded into memory, much like how Linux loads the kernel modules appropriate to the user’s actual hardware and configuration.
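Dependency loading of this kind could be sketched as a topological sort. The manifest and module names below are hypothetical; `graphlib.TopologicalSorter` is part of the Python standard library (3.9 and later) and simply orders items so that dependencies come first.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical manifest: each module lists the modules it depends on.
DEPENDENCIES = {
    "experience": {"renderer", "storage"},
    "renderer": {"display"},
    "storage": set(),
    "display": set(),
    "printer": set(),  # available, but not needed for this experience
}

def load_order(target, dependencies):
    """Return only the modules needed for `target`, dependencies first."""
    needed, stack = set(), [target]
    while stack:  # walk the dependency graph outward from the target
        name = stack.pop()
        if name not in needed:
            needed.add(name)
            stack.extend(dependencies[name])
    graph = {name: dependencies[name] for name in needed}
    return list(TopologicalSorter(graph).static_order())

order = load_order("experience", DEPENDENCIES)
print(order)
```

Only what the requested experience needs gets loaded, so the unused printer module never enters memory.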

If the scheduler is itself an LLM, this raises the question: who starts the scheduler? What’s the equivalent of the BIOS that gets an LLM scheduler going in the first place? Typically such things would be code baked into the hardware itself. What might develop is hardware that not only bootstraps and runs LLMs directly but can also reconfigure its own physical structure.

In much the same way that software programs can download new versions of themselves from the internet, LLMs might be able to generate patterns that download new versions of their weights, or possibly even download new firmware into the host hardware that runs them. But this firmware might be more than code one day; it might actually be the configuration of dynamic hardware like FPGAs, whose logic can be reconfigured after manufacture. LLMs might one day be able to redesign the hardware they run on by translating the improvements they want to make into the patterns of their own hardware configuration language.

Likely for some time, though, this will be something humans excel at and will manage standards for. Traditional programming in a world of Cognitive Operating Systems might be relegated to something akin to VHDL for LLMs, shifting focus to the hardware itself rather than the interactive experience.

Who Will Build the Future?

Who will be developing on this new Cognitive Operating System if traditional coding is relegated to hardware? I believe a new type of developer will emerge—a type of neuroarchitect, specifically skilled at creating cognitive compression of experiences into LLM modules that run on Cognitive Operating Systems.

These individuals might initially resemble traditional machine learning developers, gathering data sets and creating matrix weights, but I suspect our industry will evolve to develop new methods of training. We might utilize neural interfaces that directly translate human experiences into the encoded data used in the transformations of LLMs. If someone is putting together a multimodal LLM for tic-tac-toe, they might physically operate the game, focus their vision on it, and record those interactive patterns into an LLM that can recreate it in pixel form. These patterns might not even need to be actively generated; rather, we might be gathering these patterns all the time throughout our lives and filtering them later to form a novel module. Possibly even just imagining patterns might be enough.

New forms of collaboration could arise among people as they pool together their resources, much like the current open-source community. Imagine players of a game pooling together their digitally encoded memories of the game mechanics and lore to create an experience generator LLM module to distribute to the world for others to play. Perceptual collaboration could take place on platforms where people can inspect, remix, and share their experiences.

History Rhymes

I’ll conclude this article by saying confidently that I have no idea what the future will bring. That said, there’s an apt quote: “History doesn’t repeat itself, but it does rhyme.” It is undeniable that the abstraction of LLMs as pattern transformers of linear memory shares aspects with traditional software operating systems. As hardware and AI algorithms drive LLMs faster and faster, how far will that similarity carry LLMs down the path that traditional operating systems have taken? We’ll just have to wait and see. At the very least, I’m certain many similar uses will be attempted. Whatever happens, please MIT license your brainwaves: it’s going to be a wild ride.